Chat Completions: Revolutionizing System Design and User Interaction

Since its public launch in November 2022, ChatGPT has captured global attention by demonstrating the extraordinary potential of Large Language Models (LLMs). Generating human-like responses to requests ranging from practical queries to surreal prompts, ChatGPT has drafted cover letters, composed poetry, and even pretended to be William Shakespeare. This revolutionary technology is changing how we design systems and interact with computers, offering a more natural and intuitive way for users to communicate with machines.

The impact of these tools extends beyond mere novelty. They are reshaping the landscape of user interaction by providing seamless, conversational interfaces that can understand and respond to complex human inputs. This evolution presents a significant design challenge: creating contextually rich experiences that feel natural and engaging for users. By integrating chat completions into applications, we can enhance accessibility, streamline workflows, and revolutionize the way we work and communicate.

The Chat Completion interface demands a shift in how designers and prompt engineers approach system design. Instead of static and linear interactions, these interfaces require dynamic and context-aware communication strategies. Designers must anticipate a wide array of user inputs and craft responses that are not only accurate but also contextually relevant. This involves understanding user intent, maintaining conversational flow, and adapting to the user's needs in real-time.

The Future of System Design and User Interaction

Chat completions are transforming the way we work by improving efficiency, effectiveness, and speed. AI-powered chat completions streamline writing tasks such as generating essays, memos, and other content. As we embrace these technologies, our challenge is to develop tools that align with human goals and to educate users on their ethical use. Just as calculators didn't replace math education but became powerful tools, AI-mediated communication will continue to evolve, supporting our work while requiring thoughtful implementation.

- Traditional Interfaces to Dynamic Communication

- The advent of chat completions is ushering in a new era of system design. Traditional interfaces are giving way to more dynamic and interactive modes of communication. Users now expect systems to understand natural language inputs and provide contextually relevant responses. This shift necessitates a redesign of user interfaces to accommodate conversational agents, making interactions more fluid and intuitive.

- Enhancing Accessibility

- These advancements are enhancing accessibility, enabling users who may not be familiar with technical jargon or complex interfaces to interact with systems effortlessly. The democratization of technology through conversational AI is making powerful tools available to a broader audience, fostering inclusivity and engagement.

- Integration and Evolution

- The integration of chat completions into various applications will continue to evolve. From customer support to personal assistants and beyond, the ability of AI to converse naturally and meaningfully with users will redefine our relationship with technology. The future of system design lies in creating seamless, user-centric interactions that leverage the full potential of AI-driven communication.

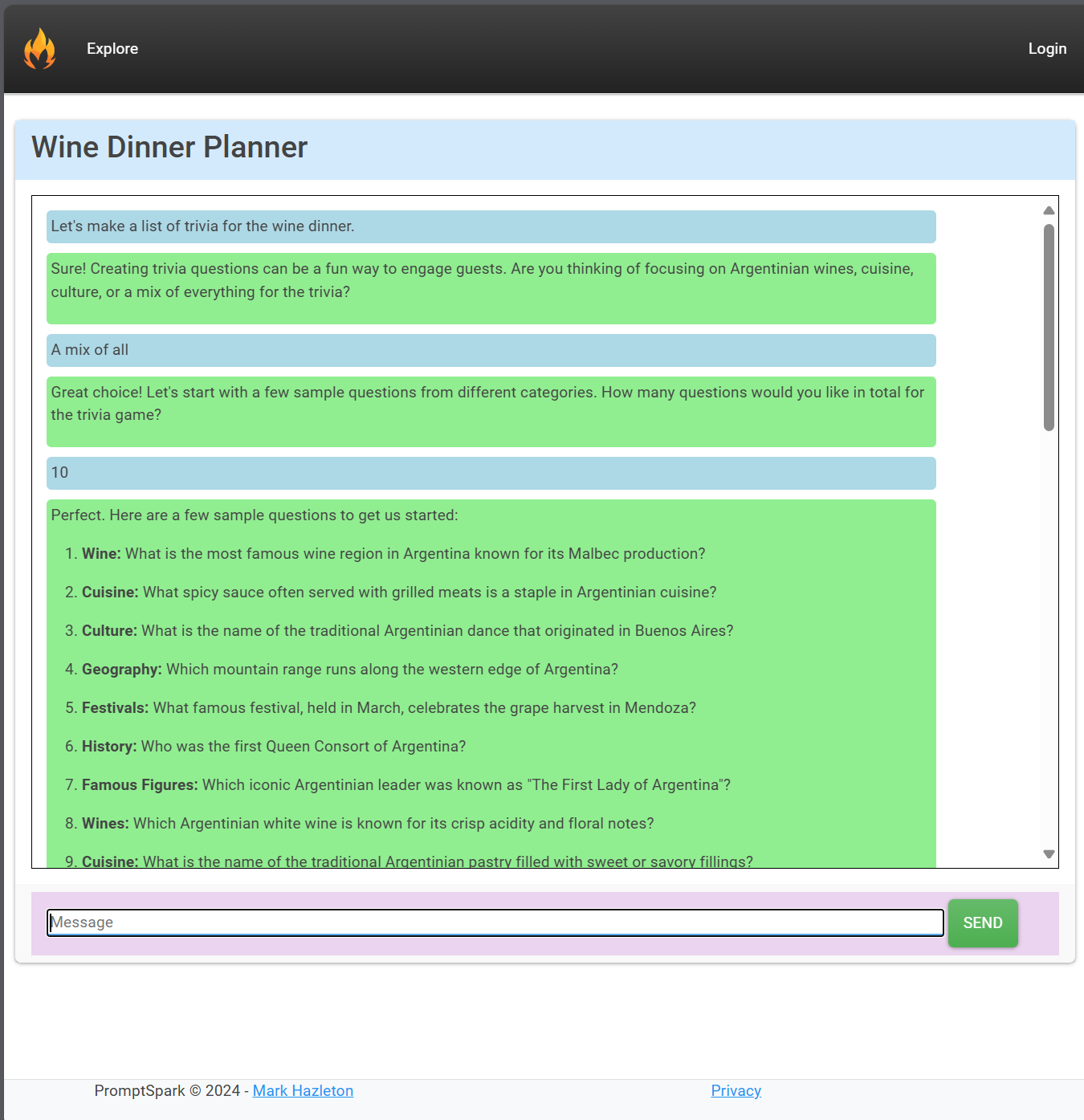

Chat Completions in Prompt Spark

Chat Completion was added to the Prompt Spark project to demonstrate a chat, co-pilot, or assistant interface. This integration allows users to converse with spark variants derived from a given Core Spark, providing a practical and insightful way to evaluate their performance.

Microsoft.SemanticKernel NuGet Package

The Microsoft Semantic Kernel is an open-source SDK designed to help developers build AI agents by combining existing code with AI models from various providers like OpenAI, Azure, and Hugging Face. It functions as an AI orchestration layer, enabling the creation of agents that can automate tasks by calling existing code. This SDK allows for extensibility through plugins and connectors, making it easy to integrate various AI services and automate complex business processes.

The Microsoft.SemanticKernel NuGet package is the .NET (C#) distribution of this SDK; the Python and Java versions are published separately. It gives developers the tools to integrate Large Language Models (LLMs) with conventional code and to incorporate AI-driven functionality into their applications, using connectors for platforms such as OpenAI, Azure OpenAI, and Hugging Face.

Key Features and Functionality

- Plugins

- Semantic Kernel allows you to define and integrate plugins easily. These plugins can be described with simple attributes, enabling AI models to understand and use them effectively.

- Automatic Orchestration

- The SDK includes advanced orchestration capabilities, where it can automatically generate and execute plans using AI, significantly reducing the manual coding effort required to achieve complex tasks.

- Multilingual Support

- Semantic Kernel supports multiple languages, with SDKs for C#, Python, and Java. This gives a wide range of developers flexibility, regardless of their preferred programming language.

- Ease of Use

- Developers can quickly start using Semantic Kernel by installing the package and following straightforward setup instructions. Whether using .NET, Python, or Java, the initial setup involves adding the package, configuring API keys, and integrating basic sample code.

- Interactive Learning

- For hands-on learning, the SDK provides Jupyter notebooks that showcase usage examples and code snippets, helping developers to quickly grasp the core concepts and functionalities of Semantic Kernel.

Adding Chat to Prompt Spark

Background: Prompt Spark Solution

Prompt Spark is designed to help developers and businesses optimize their use of LLMs by managing, tracking, and comparing system prompts. Here are the key components of the Prompt Spark solution:

- Core Sparks

Core Sparks define the essential behavior and output expectations for LLMs. They establish rigorous requirements and guidelines to ensure consistency and quality across all interactions.

- Spark Variants

A Spark Variant is an LLM implementation of a Core Spark. Variants are used to compare responses and conduct in-depth testing and analysis of different implementations of the same Core Spark.

- User Prompts

User Prompts are collections of test inputs designed for a Core Spark. These prompts are systematically run against various Variants to assess their effectiveness and adherence to the Core Spark specifications.
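To make the relationship between these components concrete, here is a minimal sketch of how a Core Spark's User Prompts might be paired with each of its Variants for testing. This is not Prompt Spark's code. The `BuildTestRuns` helper, the variant names, and the prompts are hypothetical. The model call is left out, so the snippet only builds the (variant, prompt) test matrix.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper (not Prompt Spark's API): pair every Spark Variant with every
// User Prompt of one Core Spark so all variants are tested on the same inputs.
List<(string Variant, string Prompt)> BuildTestRuns(
    IEnumerable<string> variantNames, IEnumerable<string> userPrompts) =>
    variantNames.SelectMany(v => userPrompts.Select(p => (Variant: v, Prompt: p))).ToList();

// Illustrative data only.
var variants = new[] { "Variant A", "Variant B" };
var prompts = new[] { "Summarize this memo.", "Draft a cover letter." };

var runs = BuildTestRuns(variants, prompts);
foreach (var (variant, prompt) in runs)
    Console.WriteLine($"{variant} <- {prompt}");
```

Running the same inputs against every variant is what makes their responses directly comparable.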

Building the ChatCompletionController

The ChatCompletionController serves as a pivotal element in the Prompt Spark project, enabling real-time, AI-driven conversations with different LLM Variants. Here’s a step-by-step breakdown of its implementation:

Controller Setup

The `ChatCompletionController` setup in the Prompt Spark project initializes its dependencies through constructor injection (here, a C# 12 primary constructor). The injected services give the controller access to:

- The HTTP context for session management (`IHttpContextAccessor`).
- Real-time client communication (`IHubContext<ChatHub>`).
- Chat completion (`IChatCompletionService`).
- GPT definitions (`IGPTDefinitionService`).
- Logging (`ILogger`).

With these in place, the controller can manage chat interactions, store and retrieve session data, and send real-time updates to clients.

This modular approach enhances the maintainability and testability of the application, as each service can be easily replaced or mocked during testing. It promotes a clear separation of concerns, where each service handles a specific aspect of the controller's operations, contributing to a scalable architecture. This configuration aligns with the goals of Prompt Spark, providing a robust framework for managing and comparing LLM interactions, ensuring consistency and quality in AI-driven chat functionalities.

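```csharp
using Microsoft.AspNetCore.Http;
using Microsoft.AspNetCore.SignalR;
using Microsoft.Extensions.Logging;
using Microsoft.SemanticKernel.ChatCompletion;

// Dependencies arrive through a C# 12 primary constructor and are resolved by DI.
public class ChatCompletionController(
    IHttpContextAccessor _httpContextAccessor,          // session access
    IHubContext<ChatHub> hubContext,                     // SignalR push to clients
    IChatCompletionService _chatCompletionService,      // Semantic Kernel chat completions
    IGPTDefinitionService definitionService,             // loads GPT definitions
    ILogger<ChatCompletionController> logger) : OpenAIBaseController
{
    // Index and SendMessage actions go here.
}
```

Index Action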

The `Index` action in the `ChatCompletionController` serves as the initial entry point for setting up a chat session based on a specific GPT definition. When a request is made to this action with an optional `id` parameter, it checks if the `id` is zero and redirects to the default chat view if necessary. If a valid `id` is provided, it retrieves the corresponding GPT definition using the `IGPTDefinitionService` and serializes this information into a session variable. This setup ensures that subsequent requests within the session can access the necessary GPT definition details, facilitating a seamless chat experience.

The purpose of this action is to prepare the chat environment by loading the appropriate definition data, which includes the core prompts and configurations for the chat session. By storing the `DefinitionDto` in the session, the application maintains stateful information that can be used across multiple interactions, enhancing the user experience by ensuring that each chat session is contextually aware and properly configured based on the selected GPT definition. This approach aligns with Prompt Spark's goal of optimizing and managing LLM interactions efficiently.
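The Index action relies on a common ASP.NET Core pattern: session state stores strings, so objects are serialized to JSON on write and deserialized on read. The sketch below mimics that pattern without a web host. Its assumptions are:

- A `Dictionary<string, string>` stands in for the session.
- System.Text.Json is used in place of Newtonsoft.Json.
- The `SetJson`/`GetJson` helpers and the sample field values are hypothetical.

It also shows the missing-key case, which is what a later request encounters if the session has expired.

```csharp
#nullable enable
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical helpers mirroring ISession.SetString/GetString plus JSON (de)serialization.
void SetJson<T>(IDictionary<string, string> store, string key, T value) =>
    store[key] = JsonSerializer.Serialize(value);

T? GetJson<T>(IDictionary<string, string> store, string key) =>
    store.TryGetValue(key, out var json) ? JsonSerializer.Deserialize<T>(json) : default;

// A Dictionary<string, string> stands in for the session's string-only store.
var session = new Dictionary<string, string>();

// Illustrative definition data; only "Prompt" mirrors a field the controller reads.
var definition = new Dictionary<string, string>
{
    ["Id"] = "42",
    ["Prompt"] = "You are a helpful recipe assistant."
};

SetJson(session, "DefinitionDto", definition);
var restored = GetJson<Dictionary<string, string>>(session, "DefinitionDto");
Console.WriteLine(restored?["Prompt"]);

// A missing key (for example, after the session expires) yields null, not an object.
var missing = GetJson<Dictionary<string, string>>(session, "NoSuchKey");
Console.WriteLine(missing is null);
```

Checking for null on read is cheaper than letting a deserializer throw on a missing value.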

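```csharp
public async Task<IActionResult> Index(int id = 0)
{
    // No definition selected: return a redirect to the default chat view and stop here.
    if (id == 0)
        return Redirect("/OpenAI/Chat");

    // Load the GPT definition (core prompt and settings) for this chat.
    var definitionDto = await definitionService.GetDefinitionDtoAsync(id);
    if (definitionDto == null)
        return NotFound();

    // Persist the definition in session so SendMessage can rebuild the system prompt.
    var session = _httpContextAccessor.HttpContext.Session;
    session.SetString("DefinitionDto", JsonConvert.SerializeObject(definitionDto));

    return View(definitionDto);
}
```

SendMessage Action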

The `SendMessage` action in the `ChatCompletionController` processes user messages, managing the conversation flow between the user and the AI system. It retrieves the stored `DefinitionDto` from the session, which contains the core prompts and configurations for the chat. The action then constructs the chat history by incorporating system messages and parsing the conversation history provided in the request. This setup ensures that the AI has the necessary context to generate appropriate responses.
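The alternating-turn convention used to rebuild the history is easy to get wrong, so here is a minimal, dependency-free sketch of it. It assumes the client posts the transcript as a JSON array of strings. Even indexes hold user turns and odd indexes hold the assistant's earlier replies. The `BuildHistory` helper and the plain-string role names are hypothetical stand-ins for Semantic Kernel's `ChatHistory` and its roles.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical helper: rebuild an ordered (Role, Content) list from the posted transcript.
// The system prompt comes first, then turns alternate user (even) / assistant (odd).
List<(string Role, string Content)> BuildHistory(string systemPrompt, string conversationJson)
{
    var result = new List<(string Role, string Content)> { ("system", systemPrompt) };

    // Treat a missing, empty, or "null" transcript as "no prior turns".
    var turns = string.IsNullOrWhiteSpace(conversationJson)
        ? new List<string>()
        : JsonSerializer.Deserialize<List<string>>(conversationJson) ?? new List<string>();

    for (int i = 0; i < turns.Count; i++)
        result.Add((i % 2 == 0 ? "user" : "assistant", turns[i]));

    return result;
}

var history = BuildHistory(
    "You are a recipe assistant.",
    "[\"How do I make bread?\", \"Do you want a yeast or quick bread?\", \"Yeast, please.\"]");

foreach (var (role, content) in history)
    Console.WriteLine($"{role}: {content}");
```

Tagging replies with the assistant role lets the model tell its own earlier answers apart from instructions.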

The second half of the action handles real-time delivery over SignalR:

- As the chat completion service streams the AI's response, the action buffers the fragments.
- Whenever a fragment contains a newline, it converts the buffered Markdown to HTML (`Markdown.ToHtml`) and pushes it to connected clients.
- Once the stream ends, it sends any remaining text.
- Errors raised while streaming are caught and logged.

This gives a dynamic, responsive chat experience, in line with Prompt Spark's objective of optimizing LLM interactions by maintaining context and giving users immediate feedback.

```csharp
[HttpPost]
public async Task<IActionResult> SendMessage([FromForm] string message, [FromForm] string conversationHistory)
{
    if (string.IsNullOrEmpty(message))
    {
        logger.LogError("Invalid input");
        return BadRequest("Invalid input");
    }

    // Restore the GPT definition saved by the Index action.
    var session = _httpContextAccessor.HttpContext.Session;
    var definitionDtoJson = session.GetString("DefinitionDto");
    if (string.IsNullOrEmpty(definitionDtoJson))
        return BadRequest("No chat definition found in session.");
    var definitionDto = JsonConvert.DeserializeObject<DefinitionDto>(definitionDtoJson);

    // System messages: the definition's prompt plus conversational guidance.
    var chatHistory = new ChatHistory();
    chatHistory.AddSystemMessage(definitionDto.Prompt);
    chatHistory.AddSystemMessage("You are in a conversation, keep your answers brief, always ask follow-up questions, ask if ready for full answer.");

    // The transcript is a JSON array alternating user (even index) and assistant (odd index) turns.
    var messages = JsonConvert.DeserializeObject<List<string>>(conversationHistory ?? "[]") ?? new List<string>();
    for (int i = 0; i < messages.Count; i++)
    {
        if (i % 2 == 0)
            chatHistory.AddUserMessage(messages[i]);
        else
            chatHistory.AddAssistantMessage(messages[i]);
    }

    try
    {
        var buffer = new StringBuilder();
        await foreach (var response in _chatCompletionService.GetStreamingChatMessageContentsAsync(chatHistory))
        {
            if (response?.Content == null) continue;
            buffer.Append(response.Content);

            // Flush whenever a fragment contains a newline.
            if (response.Content.Contains('\n'))
            {
                await SendHtmlAsync(buffer.ToString());
                buffer.Clear();
            }
        }

        // Send whatever remains after the stream ends.
        if (buffer.Length > 0)
            await SendHtmlAsync(buffer.ToString());
    }
    catch (Exception ex)
    {
        logger.LogError(ex, "Error occurred while processing the request");
    }
    return Ok();

    // Convert Markdown to HTML and push it; Clients.All broadcasts to every connected client.
    Task SendHtmlAsync(string markdown) =>
        hubContext.Clients.All.SendAsync("ReceiveMessage", "System", Markdown.ToHtml(markdown));
}
```
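The newline-triggered buffering can be isolated and exercised without a model or SignalR. This sketch replays a hypothetical sequence of streamed fragments through the same flushing rule:

1. Append each fragment to the buffer.
2. Flush the buffer whenever a fragment contains a newline.
3. Flush the remainder when the stream ends.

`FakeStream` and `ChunkByLine` are illustrative names, and the Markdown-to-HTML conversion and hub call are omitted.

```csharp
using System;
using System.Collections.Generic;
using System.Text;
using System.Threading.Tasks;

// Hypothetical stand-in for the model's streaming output.
async IAsyncEnumerable<string> FakeStream(IEnumerable<string> fragments)
{
    foreach (var f in fragments)
    {
        await Task.Yield(); // simulate asynchronous arrival
        yield return f;
    }
}

// Flushing rule: emit the whole buffer each time a fragment contains '\n',
// then emit whatever is left once the stream ends.
async Task<List<string>> ChunkByLine(IAsyncEnumerable<string> stream)
{
    var flushed = new List<string>();
    var buffer = new StringBuilder();
    await foreach (var fragment in stream)
    {
        if (fragment == null) continue;
        buffer.Append(fragment);
        if (fragment.Contains('\n'))
        {
            flushed.Add(buffer.ToString());
            buffer.Clear();
        }
    }
    if (buffer.Length > 0)
        flushed.Add(buffer.ToString());
    return flushed;
}

var chunks = await ChunkByLine(FakeStream(new[] { "# Bre", "ad\n", "Mix flour ", "and water.\nKnead", " well." }));
foreach (var c in chunks)
    Console.WriteLine($"[{c}]");
```

Because the rule flushes the entire buffer rather than only up to the newline, a pushed chunk can end mid-line ("Knead" above). Splitting at the last newline and keeping the tail in the buffer would keep every pushed chunk on line boundaries. That matters when each chunk is rendered as Markdown on its own.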

Benefits of Chat Completion in Prompt Spark

- Enhanced Interactivity

- By leveraging real-time chat functionalities, users can interact dynamically with different LLM variants, providing immediate feedback and insights.

- Improved Testing and Evaluation

- The ability to converse with multiple LLM variants based on a single Core Spark allows for comprehensive testing and performance comparison, ensuring adherence to quality standards.

- Efficient Prompt Management

- Keeping the definition DTO in session state, while the client sends the conversation history with each request, streamlines tracking and analyzing interactions.

Integrating Chat Completion into the Prompt Spark project adds a new way to manage and evaluate LLM interactions. It not only demonstrates the practical implementation of a chat interface but also gives developers a valuable tool for optimizing their use of LLMs through prompt engineering.

Visit Prompt Spark, the LLM prompt management tool, to explore its capabilities and elevate your prompt engineering projects.